Insight into the Mind of GPT-4: Playing Go together

I won handily, but I still think it might soon surpass human play. And it can discuss the game reasonably well!

After reading on Hacker News that GPT-4 had beaten someone (not sure how strong they were) at chess, I of course wondered if it would be able to play Go convincingly.

Go has a nice, well-documented record format called SGF (Smart Game Format), and there were apparently enough SGF files in GPT-4’s training data for it to recognize the format immediately and be conversant in it.

Update, Mar 24: based on some experimentation (which you can see at the end of the article), it seems that GPT-4 has no “spatial” awareness of the Go board whatsoever, but is simply (or is it actually simple?) predicting the next move based on the moves that came before. This is more or less what you’d expect it to do, I guess, but that description oversimplifies the internal representation it has developed of “correctness” and “goodness” of play.

In other words, is it not playing from first principles at all, but rather performing the purest form of imitation of the corpus of SGF files it was trained on? Given that its play is actually passable and at any rate coherent, despite having been trained on every SGF in its training set, including very low-level play and, I’m sure, gobbledygook, I wonder how it would fare if it were trained on, say, AlphaGo Zero game records alone. But then it might lose its ability to comment on the game itself, since presumably that ability derives from commented SGFs in the wild. Hmm, maybe it would then need to be trained on a set of commented professional SGFs? Interesting…

So, when I asked it to play with me using SGF, it was off to the races!

Given the complexity of Go, once the opening is over and fighting begins, it becomes exceedingly unlikely that the same game has ever been played before. So we can be fairly certain that GPT-4 is indeed the one playing, as opposed to parroting a game it has already seen.
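To make the “never played before” argument a bit more concrete: given a collection of earlier game records (hypothetical here; I did not run this check on our game), one could find the move at which a game departs from every known game. A minimal sketch, assuming each game is a list of SGF move strings such as "B[pd]"; the function name is my own, and it ignores the fact that a rotated or mirrored game is really the same game:

```python
def novelty_point(game, corpus):
    """Return the 1-based move number at which `game` first departs from
    every game in `corpus`, or None if some known game starts with it.

    `game` and each entry of `corpus` are lists of SGF moves like "B[pd]".
    Hypothetical helper for illustration; board symmetries are ignored.
    """
    longest_shared = 0
    for known in corpus:
        shared = 0
        for ours, theirs in zip(game, known):
            if ours != theirs:
                break
            shared += 1
        longest_shared = max(longest_shared, shared)
    if longest_shared == len(game):
        return None  # the whole game so far appears in the corpus
    return longest_shared + 1


if __name__ == "__main__":
    ours = ["B[pd]", "W[dp]", "B[pp]", "W[dd]", "B[pj]"]
    known_games = [
        ["B[pd]", "W[dp]", "B[pp]", "W[dd]", "B[fq]"],
        ["B[pd]", "W[dd]", "B[pp]"],
    ]
    print(novelty_point(ours, known_games))  # 5
```

On a real corpus you would also want to normalize every game under the eight board symmetries before comparing, since the same opening is often played in a rotated or mirrored orientation.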

Here is the game after 12 moves:

Black is me (Ethan); White is GPT-4.

Looks totally reasonable! So it passes the basic coherence test. The two attached stones on the bottom are somewhat atypical, but I don’t think they’re bad per se. (I’m about 3k, so what do I know?)

Then White begins an attack in the top-right corner, which goes poorly for White, though I don’t think it was totally unreasonable. KataGo analysis suggests I made several poor moves of my own that were open to exploitation.

At this point, I chatted to GPT-4:

I'm sorry, I may be being overly aggressive: I wasn't sure how strong you would be. I think I may kill your group on the side, which was my intention since it's within my base of influence from the sanrensei. I think a better approach for you would have been to extend along the side rather than play the empty triangle attempting to kill my two stones in the corner.

To which GPT-4 responded:

It's fine to play aggressively! After all, the game of Go is about territory and capturing stones. Your move at F16 is indeed a strong move. I'll try to salvage the situation as best as I can.

(Note: outside of SGF, its coordinate system was apparently rotated or mirrored relative to the SGF record.)
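For anyone wanting to check this kind of thing: SGF writes a point as two lowercase letters, column first and then row, with “aa” at the top-left corner, while the usual on-screen labels skip the letter I and count rows up from 1 at the bottom. Here is a small sketch (the helper names are my own) that converts between the two and lists all eight rotations and reflections of a point, which is one way to test whether a coordinate GPT-4 mentions could be a rotated or mirrored version of a move in the record:

```python
# Display letters skip "I"; rows are counted from 1 at the bottom.
COLUMNS = "ABCDEFGHJKLMNOPQRST"
SIZE = 19


def sgf_to_indices(point):
    """Convert an SGF point such as "pd" to 0-based (col, row), origin top-left."""
    return ord(point[0]) - ord("a"), ord(point[1]) - ord("a")


def indices_to_display(col, row):
    """Convert 0-based (col, row) to a display label such as "Q16"."""
    return f"{COLUMNS[col]}{SIZE - row}"


def sgf_to_display(point):
    return indices_to_display(*sgf_to_indices(point))


def symmetric_labels(point):
    """Display labels of all eight rotations/reflections of an SGF point."""
    col, row = sgf_to_indices(point)
    m = SIZE - 1
    variants = [
        # identity, left-right mirror, top-bottom mirror, 180-degree rotation
        (col, row), (m - col, row), (col, m - row), (m - col, m - row),
        # diagonal flip, two 90-degree rotations, anti-diagonal flip
        (row, col), (m - row, col), (row, m - col), (m - row, m - col),
    ]
    return [indices_to_display(c, r) for c, r in variants]


if __name__ == "__main__":
    print(sgf_to_display("pd"))  # Q16
    print(symmetric_labels("nd"))
```

For instance, Black’s `nd` (O16 in these labels) becomes F16 under a left-right mirror. That only shows a mirrored board could produce such a label; it doesn’t tell us which move GPT-4 actually meant.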

I tried exploring how GPT-4 “sees” Go: whether it views the game as a list of moves at the end of which it makes its own move, or whether it has some form of spatial awareness that would let it represent the board in other forms, perhaps by showing it the board state as a grid rather than using the SGF format:

(the bottom of this message is cut off in the above screenshot)

A crude system perhaps, but clear enough. However, this prompt produced gobbledygook from GPT-4:

The output includes such delightful points as apparent superpositions of black and white stones (“WB” and “BW”). Clearly a non-starter: it has no discernible relationship to the game record.
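To score that kind of output rather than eyeball it, one rough approach is to compare the grid GPT-4 produces against a reference grid, cell by cell. A minimal sketch, assuming both grids are whitespace-separated rows of “.”, “B” and “W” with no coordinate labels (that format and the function names are assumptions, not the exact prompt I used):

```python
VALID_CELLS = {".", "B", "W"}


def parse_grid(text):
    """Split a grid of whitespace-separated cells into a list of rows."""
    return [line.split() for line in text.strip().splitlines()]


def compare_grids(candidate, reference):
    """Compare a model-produced grid with a reference grid.

    Returns (shape_ok, invalid, mismatched): whether the dimensions agree,
    the (row, col) positions holding tokens that are not valid cells
    (e.g. "WB"), and the positions whose valid cell differs from the
    reference. Only the overlapping region is compared if shapes differ.
    """
    shape_ok = len(candidate) == len(reference) and all(
        len(a) == len(b) for a, b in zip(candidate, reference)
    )
    invalid, mismatched = [], []
    for r, (cand_row, ref_row) in enumerate(zip(candidate, reference)):
        for c, (cand, ref) in enumerate(zip(cand_row, ref_row)):
            if cand not in VALID_CELLS:
                invalid.append((r, c))
            elif cand != ref:
                mismatched.append((r, c))
    return shape_ok, invalid, mismatched


if __name__ == "__main__":
    reference = parse_grid(". B .\n. W .\n. . .")
    candidate = parse_grid(". WB .\n. B .\n. . .")
    print(compare_grids(candidate, reference))  # (True, [(0, 1)], [(1, 1)])
```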

I abandoned that experiment (but took it up again; see the bottom of this post).

The game progressed like this:

There was a pretty interesting fight, and a delightful stack of stones in the middle. At one point, GPT-4 decided it had had enough and, for its next move, replaced one of my stones (K10) with its own, as it has done in chess matches.

When I asked it to play a legal move, it responded with move 56. This was the only illegal move it made in the game.
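The illegal move here was the simplest kind: playing on a point that is already occupied. A minimal sketch of that check (hypothetical helpers, using the usual labels A to T without I, and rows numbered from the bottom); it deliberately ignores suicide and ko, which would need liberty counting:

```python
# Column labels skip the letter I, as on most Go boards.
COLUMNS = "ABCDEFGHJKLMNOPQRST"


def display_to_indices(label, size=19):
    """Convert a label such as "K10" to 0-based (row, col), row 0 at the top.

    Raises ValueError for letters that are not board columns (e.g. "I")
    or a non-numeric row part, and IndexError for an empty label.
    """
    col = COLUMNS.index(label[0].upper())
    row = size - int(label[1:])
    return row, col


def is_playable(board, label):
    """Occupancy-only legality check: the point must exist and be empty.

    Suicide and ko are deliberately NOT checked here.
    """
    size = len(board)
    try:
        row, col = display_to_indices(label, size)
    except (ValueError, IndexError):
        return False
    if not (0 <= row < size and 0 <= col < size):
        return False
    return board[row][col] == "."


if __name__ == "__main__":
    board = [["."] * 19 for _ in range(19)]
    board[9][9] = "B"  # a black stone on K10
    print(is_playable(board, "K10"))  # False: already occupied
    print(is_playable(board, "Q16"))  # True
```

K10 corresponds to the SGF point `jj`, which Black played as move 9 in the game record later in this post, so a check like this would have rejected GPT-4’s move.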

Later, I asked GPT-4 to describe the state of the board in more general terms, and then to write a haiku about each move it made:

How nice! It also can pretty reasonably (albeit over-generally) describe its reasoning and its impression of the flow of play:

This was the real mind-blowing thing for me.

My general impression is that GPT-4’s ability to play Go is an “emergent” ability, i.e. it was not explicitly part of what it was trained to do, and it is a jump in abstraction of roughly the same order as the one from AlphaGo to AlphaGo Zero, in the sense that it has achieved a higher degree of generality.

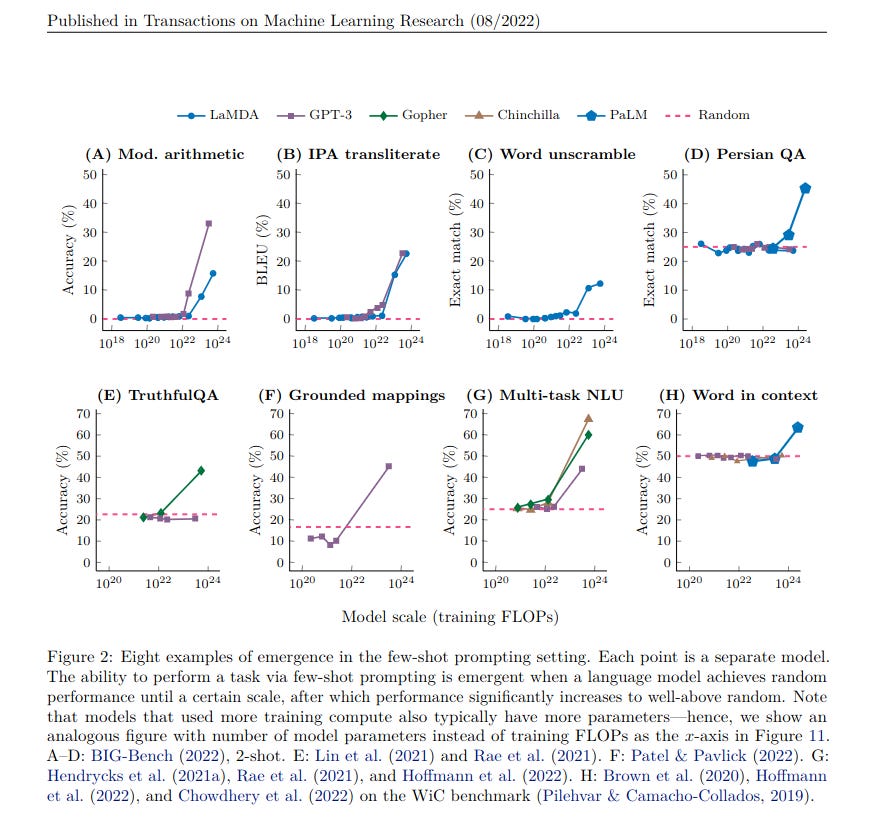

A fascinating paper that showed up on Hacker News recently discusses “emergent abilities” of LLMs as a function of model size:

Presumably, we could also draw another graph like this one, with Elo rating on the Y-axis and FLOPs on the X-axis.
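For context on what such a Y-axis would mean: under the standard Elo model, a rating gap translates into an expected score (a win probability, counting draws as half). A sketch of that formula and the usual update rule; the K-factor of 32 is just a common illustrative choice, not anything taken from the paper:

```python
def expected_score(rating_a, rating_b):
    """Expected score of player A against player B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def updated_rating(rating, opponent, score, k=32):
    """New rating after one game; score is 1 for a win, 0.5 a draw, 0 a loss."""
    return rating + k * (score - expected_score(rating, opponent))


if __name__ == "__main__":
    print(expected_score(1900, 1500))  # about 0.909: a 400-point gap is 10:1 odds
    print(updated_rating(1500, 1500, 1.0))  # 1516.0
```

Because only rating differences matter in this model, an Elo-versus-FLOPs curve would effectively say how often a larger model should be expected to beat a smaller one.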

This, to me, is quite profound. I wonder what this graph would look like in the case of Go! Probably something quite parabolic, too. How does this ability scale with model size? Is it an S-curve? Might it even surpass known training methods for Go-playing networks? That would be heartening, as it would reinforce the notion that an aesthetic and ethical sensibility plays a central role in becoming a master of Go.

What rating would GPT-4 have now? Perhaps something like 15k to 10k, I think. I wonder where it would be if the model were 10x or 100x larger. Will it also surpass human players, as AlphaGo did? I would love to be able to talk with AlphaGo (or KataGo / Leela Zero): what are you thinking?? Please analyze my game!

Eventually, the game reached a point where I felt it wasn’t worth playing any more. I was interested to see how it would handle more complicated fights in the center, and while it generally made decent threats, it let me disconnect its stones and solidify my moyo. I asked GPT-4 for its thoughts:

Here is the rest of the game record:

```
(;FF[4]GM[1]CA[UTF-8]SZ[19]ST[0]
GN[Demo Board]PB[Ethan]PW[GPT4]BR[3p]WR[3p]RE[?]KM[6.5]RU[Japanese]
;B[pd];W[dp];B[pp];W[dd];B[pj];W[jp];B[gc];W[cf];B[jj];W[jq];B[nc];W[oc];B[od];W[ne];B[nd];W[pb];B[qb];W[qc];B[pc];W[qd];B[rb];W[rc];B[qf];W[qe];B[pf];W[pe];B[oe];W[re];B[rf];W[rd];B[gf];W[jl];B[cn];W[fq];B[ck];W[dn];B[dm];W[dr];B[jn];W[jm];B[jo];W[jk];B[hn];W[hl];B[fm];W[gm];B[gn];W[hn];B[go];W[fo];B[ho];W[hp];B[fl];W[gl];B[ji];W[jh];B[gj];W[gi];B[hj];W[gh];B[ih];W[ii];B[ig];W[if];B[hf];W[ie];B[hd];W[id];B[ic];W[ib];B[jc];W[kc];B[kb];W[ce];B[db];W[dc];B[cb];W[cc];B[eb];W[ec];B[jg];W[kg];B[kh];W[ki];B[lh];W[li];B[kj];W[lj];B[lk];W[mk];B[ll];W[ml];B[lm];W[mm];B[mo];W[mp];B[no];W[np];B[oo];W[op];B[pq];W[qp];B[qo];W[ro];B[rp];W[rq];B[qq];W[qr])
```
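The record above has the usual SGF shape: a root node holding game-level properties (board size SZ, komi KM, player names PB/PW, rules RU, and so on), followed by one node per move. A small sketch for pulling those apart with regular expressions; it assumes the root node contains no literal “;” inside a property value and keeps only the first value of multi-valued properties, so treat it as a quick hack rather than a full SGF parser:

```python
import re

# KEY[value]; a backslash escapes the next character, e.g. "\]" inside a value.
PROPERTY = re.compile(r"([A-Z]+)\[((?:\\.|[^\\\]])*)\]")
# Move nodes such as ";B[pd]"; an empty value ("B[]") is a pass.
MOVE = re.compile(r";\s*([BW])\[((?:[a-s]{2})?)\]")


def root_properties(sgf):
    """Return {key: first value} for the root node (text up to the 2nd ';')."""
    start = sgf.index(";") + 1
    end = sgf.find(";", start)
    root = sgf[start:] if end == -1 else sgf[start:end]
    return dict(PROPERTY.findall(root))


def moves(sgf):
    """Return the moves in order as (color, point) tuples; "" means a pass."""
    return MOVE.findall(sgf)


if __name__ == "__main__":
    record = "(;FF[4]GM[1]SZ[19]PB[Ethan]PW[GPT4]KM[6.5];B[pd];W[dp];B[pp])"
    props = root_properties(record)
    print(props["PB"], props["PW"], props["KM"])  # Ethan GPT4 6.5
    print(len(moves(record)), moves(record)[0])  # 3 ('B', 'pd')
```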

UPDATE:

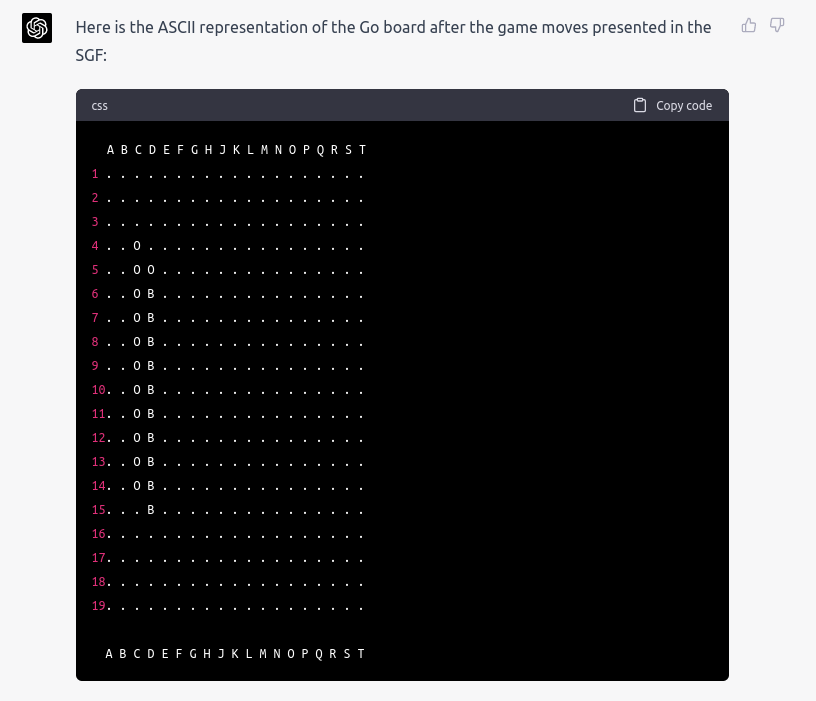

A commenter, Matt, asked whether ChatGPT can do ASCII art well at all, and whether it might be able to represent the board state graphically if given free rein to do so.

It chose to represent the final board state like this:

Since GPT-4 is so good at writing and understanding code, I decided that perhaps we could write a Python program together to do this properly.

I asked for some pseudocode:

I asked for complete implementations of the functions and ran into some bugs, but we worked through them together and arrived at the following code, which correctly displays SGF files (at least the test file):

So that’s neat!

But giving the code back to a fresh GPT-4 session and asking it to use the algorithm to output correct representations of the SGF state of our game got precisely nowhere. Disappointing! I wonder if there is a better way to teach it.

From there, I tried sending it the actual console output of the Python code, hoping it would compare its own result with the real one and learn from it. It did parrot the input back, but it could not extrapolate to other board positions during the game, as here:

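For reference, here is the Python code we ended up with:

```python
import re

# Match move nodes such as ";B[pd]" or ";W[dp]", capturing the color and the
# two-letter SGF point separately. Passes ("B[]") are skipped.
MOVE_PATTERN = re.compile(r";\s*([BW])\[([a-s]{2})\]")

# Board columns are labelled A-T, skipping the letter I by Go convention.
COLUMNS = "ABCDEFGHJKLMNOPQRST"


def parse_sgf(sgf_string):
    """Return the moves in order as (color, point) tuples, e.g. ("B", "pd")."""
    return MOVE_PATTERN.findall(sgf_string)


def sgf_move_format_to_color_and_indices(sgf_move):
    """Convert ("B", "pd") to ("B", row, col) with 0-based indices.

    SGF gives the column first and the row second, with "aa" at the top-left.
    """
    color, point = sgf_move
    col = ord(point[0]) - ord("a")
    row = ord(point[1]) - ord("a")
    return color, row, col


def display_board(board):
    header = "   " + " ".join(COLUMNS[: len(board)])
    print(header)
    for i, row in enumerate(board):
        # Rows are conventionally numbered from 19 at the top down to 1.
        label = str(len(board) - i).rjust(2)
        print(label, " ".join(row), label)
    print(header)


def main(sgf_string):
    board = [["." for _ in range(19)] for _ in range(19)]
    for move in parse_sgf(sgf_string):
        color, row, col = sgf_move_format_to_color_and_indices(move)
        board[row][col] = color  # note: captured stones are not removed
    display_board(board)


if __name__ == "__main__":
    sgf_string = """SGF_STRING_HERE"""
    main(sgf_string)
```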
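One thing this version doesn’t do is remove captured stones, so any position after a capture is displayed wrongly. A minimal sketch of how capture handling could be added (my own addition, not code from the session with GPT-4): after each stone is placed, flood-fill every adjacent opponent group and remove it if it has no liberties left. It assumes the record contains only legal moves, so suicide and ko are not checked:

```python
import re

MOVE_PATTERN = re.compile(r";\s*([BW])\[([a-s]{2})\]")
SIZE = 19


def neighbors(row, col):
    """Yield the orthogonally adjacent points that lie on the board."""
    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        r, c = row + dr, col + dc
        if 0 <= r < SIZE and 0 <= c < SIZE:
            yield r, c


def group_and_liberties(board, row, col):
    """Flood-fill the group containing (row, col); return (stones, liberties)."""
    color = board[row][col]
    stones, liberties, stack = {(row, col)}, set(), [(row, col)]
    while stack:
        r, c = stack.pop()
        for nr, nc in neighbors(r, c):
            if board[nr][nc] == ".":
                liberties.add((nr, nc))
            elif board[nr][nc] == color and (nr, nc) not in stones:
                stones.add((nr, nc))
                stack.append((nr, nc))
    return stones, liberties


def play(board, color, row, col):
    """Place a stone, remove adjacent opponent groups with no liberties,
    and return the number of stones captured."""
    board[row][col] = color
    opponent = "W" if color == "B" else "B"
    captured = 0
    for nr, nc in neighbors(row, col):
        if board[nr][nc] == opponent:
            stones, liberties = group_and_liberties(board, nr, nc)
            if not liberties:
                for r, c in stones:
                    board[r][c] = "."
                captured += len(stones)
    return captured


def replay(sgf_string):
    """Replay every move in an SGF string and return the final board."""
    board = [["." for _ in range(SIZE)] for _ in range(SIZE)]
    for color, point in MOVE_PATTERN.findall(sgf_string):
        col = ord(point[0]) - ord("a")
        row = ord(point[1]) - ord("a")
        play(board, color, row, col)
    return board


if __name__ == "__main__":
    # The white stone at bb is surrounded by four black stones and captured.
    board = replay("(;SZ[19];W[bb];B[ba];B[ab];B[cb];B[bc])")
    print(board[1][1])  # .
```

The resulting board can be passed straight to `display_board` from the listing above.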

Kind of interesting that it couldn't understand your grid representation of the game state. It probably doesn't fully grok the physical locations of the characters on the screen, and that those locations can be used to convey information.

Is it any good at ASCII art? If it can do good, original ASCII art, then it should also be capable of understanding your grid representation.

Anyway, great read. Hope you post more.